Introduction to Big O Notation

Big O notation is a mathematical notation for describing how an algorithm's running time or memory use grows as its input grows. Rather than precise measurements (such as actual execution time or CPU usage), it captures how the algorithm scales with input size, usually for the worst case.

The three fundamental principles of Big O time complexity are:

- Growth is relative to input size

- Constant factors and lower-order terms are dropped, so O(2n + 5) simplifies to O(n)

- Worst-case scenarios are typically what we measure
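
The three principles can be illustrated with a minimal TypeScript sketch. The function names `sumTwice` and `indexOfValue` are hypothetical and chosen only for this illustration, and the `steps` counter is an assumption added to make the amount of work visible.

```typescript
// Two passes over the input: roughly 2n steps, which Big O reports as O(n)
// because the constant factor 2 is dropped.
function sumTwice(values: number[]): { total: number; steps: number } {
  let total = 0;
  let steps = 0; // counts loop iterations, for illustration only
  for (const v of values) {
    total += v;
    steps++;
  }
  for (const v of values) {
    total += v;
    steps++;
  }
  return { total, steps };
}

// Linear search: it may return on the first element (best case), but the
// worst case scans every element, so it is described as O(n).
function indexOfValue(values: number[], target: number): number {
  for (let i = 0; i < values.length; i++) {
    if (values[i] === target) {
      return i;
    }
  }
  return -1; // worst case: target is absent, every element was checked
}
```

Doubling the length of `values` doubles `steps` in `sumTwice`, which is the sense in which growth is relative to input size.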

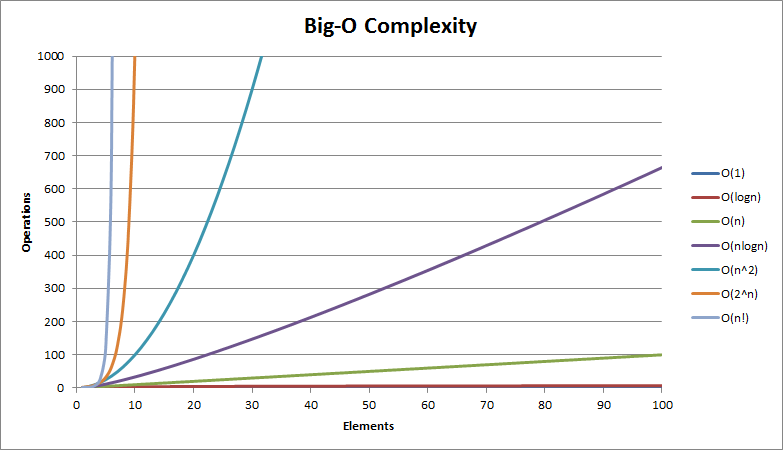

Common Big O complexities, listed from slowest-growing to fastest-growing, are O(1), O(log n), O(n), O(n log n), O(n²), O(2ⁿ), and O(n!). Because they describe growth rather than measured time, they let us compare algorithms independently of hardware and make informed decisions when designing and selecting them.
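
A few of these classes can be sketched in TypeScript. The functions below are hypothetical illustrations rather than code from the referenced course, and `binarySearch` assumes its input is already sorted in ascending order.

```typescript
// O(1): one array access, regardless of how many items there are.
function first<T>(items: T[]): T | undefined {
  return items[0];
}

// O(log n): each iteration halves the remaining search range.
// Assumes `sorted` is in ascending order.
function binarySearch(sorted: number[], target: number): number {
  let lo = 0;
  let hi = sorted.length - 1;
  while (lo <= hi) {
    const mid = lo + Math.floor((hi - lo) / 2);
    if (sorted[mid] === target) {
      return mid;
    }
    if (sorted[mid] < target) {
      lo = mid + 1;
    } else {
      hi = mid - 1;
    }
  }
  return -1;
}

// O(n): every element is visited exactly once.
function sum(values: number[]): number {
  let total = 0;
  for (const v of values) {
    total += v;
  }
  return total;
}

// O(n²): in the worst case every pair of elements is compared.
function hasDuplicate(values: number[]): boolean {
  for (let i = 0; i < values.length; i++) {
    for (let j = i + 1; j < values.length; j++) {
      if (values[i] === values[j]) {
        return true;
      }
    }
  }
  return false;
}
```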

Common Complexities

Big O notation helps us make informed decisions about which data structures and algorithms to use. Understanding their performance characteristics lets us write code that stays efficient as inputs grow.
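
As one hedged illustration of such a decision, the sketch below finds the items two arrays have in common in two ways. Both function names are hypothetical. The stated cost of `Set.prototype.has` is an assumption about typical JavaScript engines, where lookups are constant time on average; the language specification only requires them to be sublinear on average.

```typescript
// O(n * m): Array.prototype.includes scans `b` for every element of `a`.
function commonItemsWithArray(a: number[], b: number[]): number[] {
  return a.filter((x) => b.includes(x));
}

// Roughly O(n + m): build the Set once, then each lookup is fast on average
// (an assumption about typical engines, see the note above).
function commonItemsWithSet(a: number[], b: number[]): number[] {
  const lookup = new Set(b);
  return a.filter((x) => lookup.has(x));
}
```

Both versions return the same result. The difference only shows up as the arrays grow, which is exactly what Big O is meant to capture.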

Reference

Algorithms & Data Structures | Learn Algorithms with TypeScript for Interviews | Frontend Masters